The Ultimate Guide to Subtitling: From Timecodes to Best Practices

In our global, video-driven world, subtitles are more than just text on a screen—they are a vital bridge connecting content to audiences everywhere. But did you know there’s a whole science behind making them effective?

This guide will break down everything you need to know, from the technical basics to the creative nuances of professional subtitling.

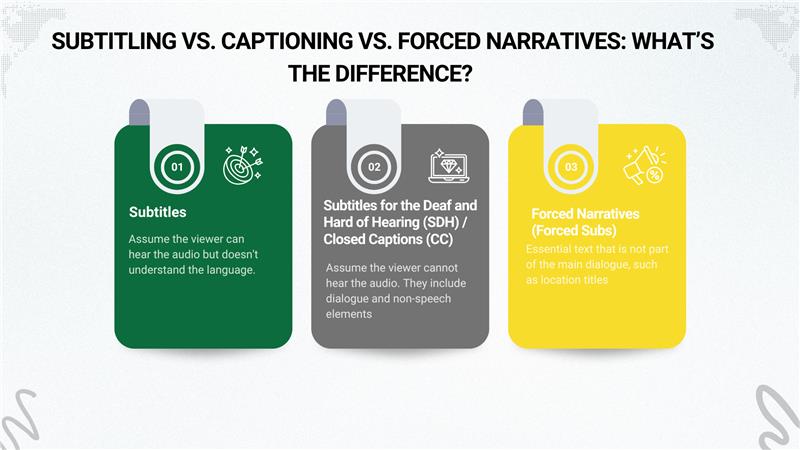

Subtitling vs. Captioning vs. Forced Narratives: What’s the Difference?

First, let’s clarify the types of text you see in video:

- Subtitles: Assume the viewer can hear the audio but doesn’t understand the language. They convey only the spoken dialogue and are typically translated from the original language.

- Subtitles for the Deaf and Hard of Hearing (SDH) / Closed Captions (CC): Assume the viewer cannot hear the audio. They include dialogue and non-speech elements like sound effects ([door creaks]), music cues (♪ tense music ♪), and speaker identification.

- Forced Narratives (Forced Subs): Text that is displayed even when subtitles are switched off, because it conveys essential information outside the main dialogue, such as location titles (e.g., MOSCOW – 1985), translated signs, or alien languages that are key to the plot.

Subtitling is a core component of multimedia localization, transforming audio dialogue into synchronized visual text. While it may seem straightforward, it requires a careful balance of technical skill and linguistic artistry.

The Technical Foundation: How Video and Timecode Work

Understanding Frames

A video is a rapid sequence of still images called frames. When played back at a standard speed (like 24 or 30 frames per second, or fps), our brain blends them into smooth motion. Each frame is a unique moment in time.

What is Timecoding?

To pinpoint any single frame, we use timecode. Written in the format HH:MM:SS:FF (Hours, Minutes, Seconds, Frames), it acts as a unique address for every moment in a video.

For example, the timecode 01:25:06:10 refers to 1 hour, 25 minutes, 6 seconds, and 10 frames into a video.
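To make the addressing concrete, here is a minimal Python sketch that turns a timecode into an absolute frame count and back. It assumes a whole-number, non-drop-frame rate such as 24 or 25 fps. Drop-frame timecode, used with 29.97 fps video, needs extra rules that are not shown here. The function names are hypothetical.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert a non-drop-frame HH:MM:SS:FF timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frames_to_timecode(total_frames: int, fps: int) -> str:
    """Convert an absolute frame count back to a non-drop-frame HH:MM:SS:FF timecode."""
    total_seconds, frames = divmod(total_frames, fps)
    total_minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


# The example timecode from the text, assuming 24 fps video.
print(timecode_to_frames("01:25:06:10", 24))
print(frames_to_timecode(122554, 24))
```

At 24 fps, the example timecode 01:25:06:10 falls on frame 122,554 of the video. Converting that frame number back gives the original timecode.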

In a subtitle file, you’ll see timecodes used to define exactly when text appears and disappears:

01:26:06:10 --> 01:26:12:15

You’re gonna stay here till you forgive yourself.
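A subtitle's on-screen duration follows directly from its two timecodes. The following Python sketch makes three assumptions: the in-point and out-point are separated by `-->`, the video runs at a non-drop-frame 24 fps, and the helper names are hypothetical. It illustrates the arithmetic only and does not parse any particular file format.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert a non-drop-frame HH:MM:SS:FF timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def cue_duration_seconds(timing_line: str, fps: int) -> float:
    """Return how long a cue written as 'IN --> OUT' stays on screen, in seconds."""
    start_tc, end_tc = (part.strip() for part in timing_line.split("-->"))
    start = timecode_to_frames(start_tc, fps)
    end = timecode_to_frames(end_tc, fps)
    if end <= start:
        raise ValueError("the out-point must come after the in-point")
    return (end - start) / fps


# The example cue, assuming 24 fps: 6 seconds and 5 frames (149 frames).
print(round(cue_duration_seconds("01:26:06:10 --> 01:26:12:15", 24), 2))
```

For the example cue, the text stays on screen for 149 frames, which is about 6.21 seconds at 24 fps.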

Crafting Effective Subtitles: A Best Practices Checklist

Creating professional subtitles isn’t just about typing what’s said. Follow these guidelines to ensure your subtitles are readable, accurate, and unobtrusive.

1. Formatting & Appearance

- Font: Use a clear, sans-serif font like Arial or Helvetica.

- Size: Use a size that is legible on all screens (typically between 32 and 47 pixels, depending on resolution).

- Color: White text with a black outline or drop shadow is standard, ensuring visibility against any background.

- Position: Keep subtitles within the “title safe” area to avoid being cut off on different screens.

- Line Length: Keep to roughly 42 characters per line (the exact limit varies by style guide and language) and no more than 2 lines on screen at once.
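These layout limits are easy to check automatically. The sketch below assumes each subtitle is a string with `\n` separating its lines, and it counts every character, including spaces. The 42-character default is an assumption based on common streaming style guides, so set `max_chars` and `max_lines` to whatever your own style guide specifies. The function name is hypothetical.

```python
from typing import List


def check_layout(subtitle: str, max_chars: int = 42, max_lines: int = 2) -> List[str]:
    """Return layout problems for one subtitle (an empty list means it passes)."""
    problems = []
    lines = subtitle.split("\n")
    if len(lines) > max_lines:
        problems.append(f"{len(lines)} lines on screen (max {max_lines})")
    for number, line in enumerate(lines, start=1):
        # len() counts every character, including spaces and punctuation.
        if len(line) > max_chars:
            problems.append(f"line {number} has {len(line)} characters (max {max_chars})")
    return problems


print(check_layout("You're gonna stay here\ntill you forgive yourself."))
print(check_layout("This single line is far too long to fit comfortably on a screen"))
```

The first subtitle passes. The second is reported as a single 63-character line, which exceeds the 42-character default.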

2. Timing & Duration

- Sync: Subtitles must appear and disappear precisely with the spoken word.

- Minimum Display Time: Keep even very short utterances (e.g., “Okay!”) on screen for at least about 5/6 of a second (20 frames at 24 fps).

- Maximum Display Time: No longer than 7 seconds. The ideal reading speed is around 160-180 words per minute.

- The 6-Second Rule: A full two-line subtitle (about 14-16 words) needs approximately 5-6 seconds on screen to be comfortably read and processed.
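The timing rules can be combined into a rough check of a subtitle's duration and reading speed. This sketch depends on several assumptions. Words are counted by splitting on whitespace. The defaults (a minimum of 5/6 of a second, a maximum of 7 seconds, and 180 words per minute) are placeholders that you should adjust to your style guide. The function name is hypothetical.

```python
from typing import List


def check_timing(text: str, duration_s: float, min_s: float = 5 / 6,
                 max_s: float = 7.0, max_wpm: float = 180.0) -> List[str]:
    """Return timing problems for one subtitle (an empty list means it passes)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    problems = []
    if duration_s < min_s:
        problems.append(f"on screen {duration_s:.2f}s (min {min_s:.2f}s)")
    if duration_s > max_s:
        problems.append(f"on screen {duration_s:.2f}s (max {max_s:.2f}s)")
    # Reading speed: number of words shown, scaled to a per-minute rate.
    wpm = len(text.split()) / duration_s * 60
    if wpm > max_wpm:
        problems.append(f"reading speed {wpm:.0f} wpm (max {max_wpm:.0f})")
    return problems


print(check_timing("Okay!", 0.5))
print(check_timing("You're gonna stay here till you forgive yourself.", 6.2))
```

With these defaults, a 16-word two-line subtitle passes at 6 seconds (160 wpm) but is flagged at 5 seconds (192 wpm). This shows why longer two-line subtitles need close to the full six seconds.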

3. Punctuation & Grammar

- Use line breaks logically, breaking at natural linguistic points (e.g., at a clause or phrase boundary).

- Italics are used for off-screen narration, dreams, lyrics, and foreign words.

- Use an ellipsis (…) to indicate a pause within a thought or trailing speech.

- Use a double hyphen (--) to indicate an interruption or abrupt cut-off in dialogue.

- Follow your style guide on periods (.) at the end of subtitles: many require standard sentence punctuation, while some omit the final period because it can make the text feel too final. Use question marks and exclamation points as needed.
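Choosing where to break a line is partly a judgment call, but a simple heuristic gets close. Among the break points that keep both lines within the limit, prefer the most balanced one. Also give strong preference to breaking right after punctuation, because punctuation often marks a clause boundary. The sketch below implements only that heuristic and does not perform real linguistic analysis. The 42-character limit, the size of the punctuation bonus, and the function name are all assumptions.

```python
from typing import List, Optional, Tuple

# Punctuation after which a break often falls at a clause boundary (heuristic only).
CLAUSE_END = (",", ".", ";", ":", "?", "!")


def break_subtitle(text: str, max_chars: int = 42, punctuation_bonus: int = 20) -> List[str]:
    """Split text into one or two lines, favoring balance and breaks after punctuation."""
    if len(text) <= max_chars:
        return [text]
    best: Optional[Tuple[int, int]] = None  # (score, index of the space to break at)
    for i, ch in enumerate(text):
        if ch != " ":
            continue
        top, bottom = text[:i], text[i + 1:]
        if len(top) > max_chars or len(bottom) > max_chars:
            continue
        score = abs(len(top) - len(bottom))  # lower means more balanced
        if top.endswith(CLAUSE_END):
            score -= punctuation_bonus  # reward a likely clause boundary
        if best is None or score < best[0]:
            best = (score, i)
    if best is None:
        raise ValueError("text does not fit on two lines; split it across two subtitles")
    i = best[1]
    return [text[:i], text[i + 1:]]


print(break_subtitle("I told you to wait, but you never listen to anyone."))
```

For this sentence, the function breaks after "wait," instead of at the exact midpoint. When a sentence has no punctuation, the function falls back to the most balanced split.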

4. Representing Sound & Context

- Sound Effects: Describe crucial sounds in lowercase inside square brackets: [glass shattering], [distant siren].

- Music: Indicate song lyrics with italics and music notes: ♪ Hello darkness, my old friend ♪

- Speaker Identification: If it’s not clear who is speaking, identify the speaker in capitals followed by a colon: DOCTOR: The results are in.

- Unclear Audio: Use labels like [muffled] or [indistinct chatter].
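If you generate SDH subtitles from a script or transcript, a few small helpers can keep these conventions consistent. This sketch assumes the square-bracket style shown in the [door creaks] example earlier. It also assumes the HTML-like `<i>` tag for italics, which WebVTT defines and which many SRT players honor. The function names are hypothetical.

```python
def sound_effect(description: str) -> str:
    """Non-speech sound: lowercase text inside square brackets."""
    return f"[{description.strip().lower()}]"


def speaker_label(speaker: str, line: str) -> str:
    """Identify an unclear speaker in capitals followed by a colon."""
    return f"{speaker.strip().upper()}: {line}"


def lyric(text: str, italic: bool = True) -> str:
    """Song lyrics wrapped in music notes, optionally italicized with <i> tags."""
    wrapped = f"♪ {text} ♪"
    return f"<i>{wrapped}</i>" if italic else wrapped


print(sound_effect("Glass shattering"))
print(speaker_label("Doctor", "The results are in."))
print(lyric("Hello darkness, my old friend"))
```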

5. Handling Foreign Language

- Untranslated: For a foreign word or phrase the audience is likely to understand, leave it untranslated and italicize it: *Bonjour!*

- Translated with Attribution: When translation is needed, indicate the language: [IN GERMAN] Good day.

- Color-Coding: Sometimes different colors are used for different speakers or languages.

Common Subtitle File Formats

Subtitles are created in a simple text file that is separate from the video. Common formats include:

- .SRT (SubRip): The most universal and widely supported format.

- .VTT (WebVTT): The standard for HTML5 video on the web.

- .ASS/.SSA (Advanced SubStation Alpha / SubStation Alpha): Supports advanced styling and formatting.

- .TTML (Timed Text Markup Language): A W3C XML-based standard widely used for broadcast and streaming delivery.
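To show how the formats differ in practice, the sketch below writes the same cue as SRT and as WebVTT. Both formats express time in hours, minutes, seconds, and milliseconds rather than frames, so frame-based timecodes must be converted first. SRT separates milliseconds with a comma, WebVTT uses a period, and a WebVTT file begins with a `WEBVTT` line. The sketch assumes a non-drop-frame integer frame rate and rounds to the nearest millisecond. The function names are hypothetical.

```python
from typing import List, Tuple

Cue = Tuple[str, str, str]  # (in timecode, out timecode, text)


def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert a non-drop-frame HH:MM:SS:FF timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def clock_time(tc: str, fps: int, ms_separator: str) -> str:
    """Convert a frame-based timecode to HH:MM:SS<sep>mmm, rounded to the nearest ms."""
    total_ms = round(timecode_to_frames(tc, fps) * 1000 / fps)
    total_seconds, ms = divmod(total_ms, 1000)
    total_minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}{ms_separator}{ms:03d}"


def to_srt(cues: List[Cue], fps: int) -> str:
    """Numbered cues, comma before milliseconds, blank line between cues."""
    blocks = []
    for number, (start, end, text) in enumerate(cues, start=1):
        timing = f"{clock_time(start, fps, ',')} --> {clock_time(end, fps, ',')}"
        blocks.append(f"{number}\n{timing}\n{text}\n")
    return "\n".join(blocks)


def to_webvtt(cues: List[Cue], fps: int) -> str:
    """A 'WEBVTT' header line, then cues with a period before milliseconds."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines += [f"{clock_time(start, fps, '.')} --> {clock_time(end, fps, '.')}", text, ""]
    return "\n".join(lines)


cue = ("01:26:06:10", "01:26:12:15", "You're gonna stay here till you forgive yourself.")
print(to_srt([cue], 24))
print(to_webvtt([cue], 24))
```

At 24 fps, 10 frames corresponds to about 417 milliseconds. The in-point 01:26:06:10 therefore becomes 01:26:06,417 in SRT and 01:26:06.417 in WebVTT.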

Software to Get Started

You can create subtitles with everything from a simple text editor (for SRT files) to dedicated software. Popular options include:

- Free/Open Source: Subtitle Workshop, Aegisub

- Professional: WinCAPS, Spot, Ooona, EZTitles

The Takeaway

Subtitling is a dynamic field that blends technical precision with creative translation. Great subtitles feel seamless, allowing the viewer to forget they’re reading and become fully immersed in the story.

By mastering these fundamentals, you’re well on your way to creating subtitles that are not just accurate, but truly enhance the viewing experience.